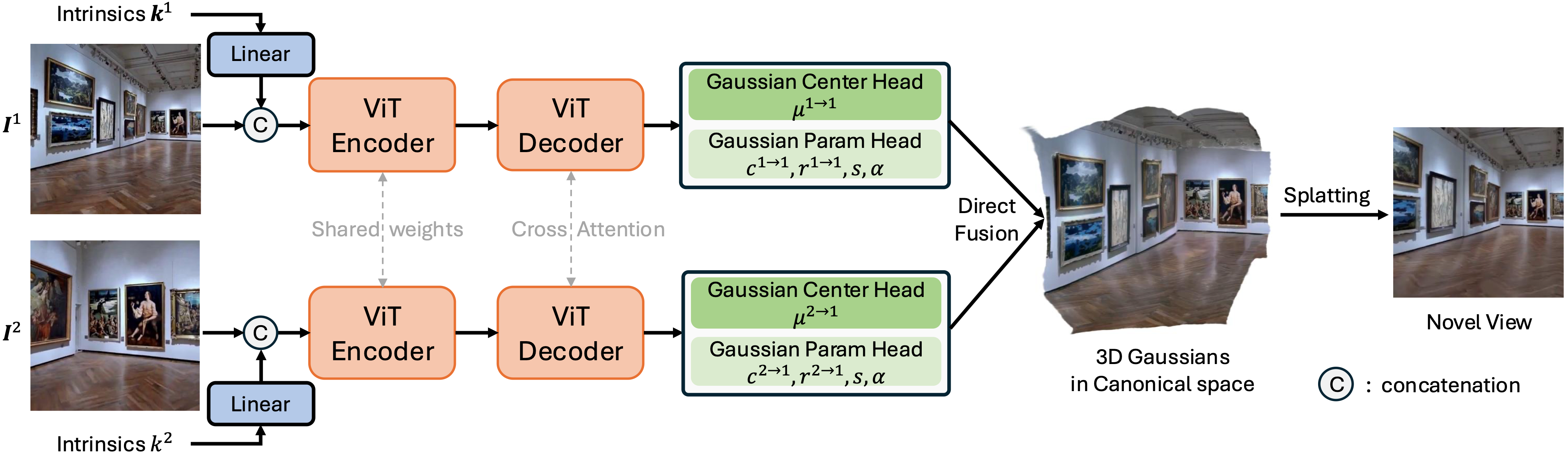

Overall framework of NoPoSplat. Given sparse unposed images, our method directly reconstructs Gaussians in a canonical space with a feed-forward network to represent the underlying 3D scene. We also introduce a camera intrinsic token embedding, which is concatenated with the image tokens as input to the network to address the scale ambiguity problem. For simplicity, we use a two-view setup as an example.
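To make the intrinsic token idea concrete, here is a minimal NumPy sketch of concatenating one intrinsic token with a view's image tokens. It is an illustration under stated assumptions, not the released implementation: the normalized intrinsics vector `[fx, fy, cx, cy]`, the single linear projection, the token dimension, the patch count, and all function names are hypothetical.

```python
import numpy as np


def make_intrinsic_token(intrinsics, weight, bias):
    """Project a (4,) intrinsics vector [fx, fy, cx, cy] to a (1, D) token.

    Assumption: a single linear layer; the actual embedding may differ.
    """
    return (intrinsics @ weight + bias)[None, :]


def add_intrinsic_token(image_tokens, intrinsics, weight, bias):
    """Concatenate the intrinsic token with (N, D) image tokens -> (N + 1, D)."""
    token = make_intrinsic_token(intrinsics, weight, bias)
    return np.concatenate([token, image_tokens], axis=0)


rng = np.random.default_rng(0)
num_patches, dim = 256, 64  # hypothetical sizes, chosen for illustration

# Stand-in for learned projection parameters (random here).
weight = rng.normal(scale=0.02, size=(4, dim))
bias = np.zeros(dim)

# Two-view setup: each view has its own image tokens and its own intrinsics,
# here normalized by image width and height (an assumption).
views = [
    (rng.normal(size=(num_patches, dim)), np.array([0.9, 0.9, 0.5, 0.5])),
    (rng.normal(size=(num_patches, dim)), np.array([1.2, 1.2, 0.5, 0.5])),
]

tokens_per_view = [add_intrinsic_token(t, k, weight, bias) for t, k in views]
print([t.shape for t in tokens_per_view])
```

Because the focal length enters the network as a token, two views with identical image content but different intrinsics produce different inputs, which is the extra information the network can use to resolve scale.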

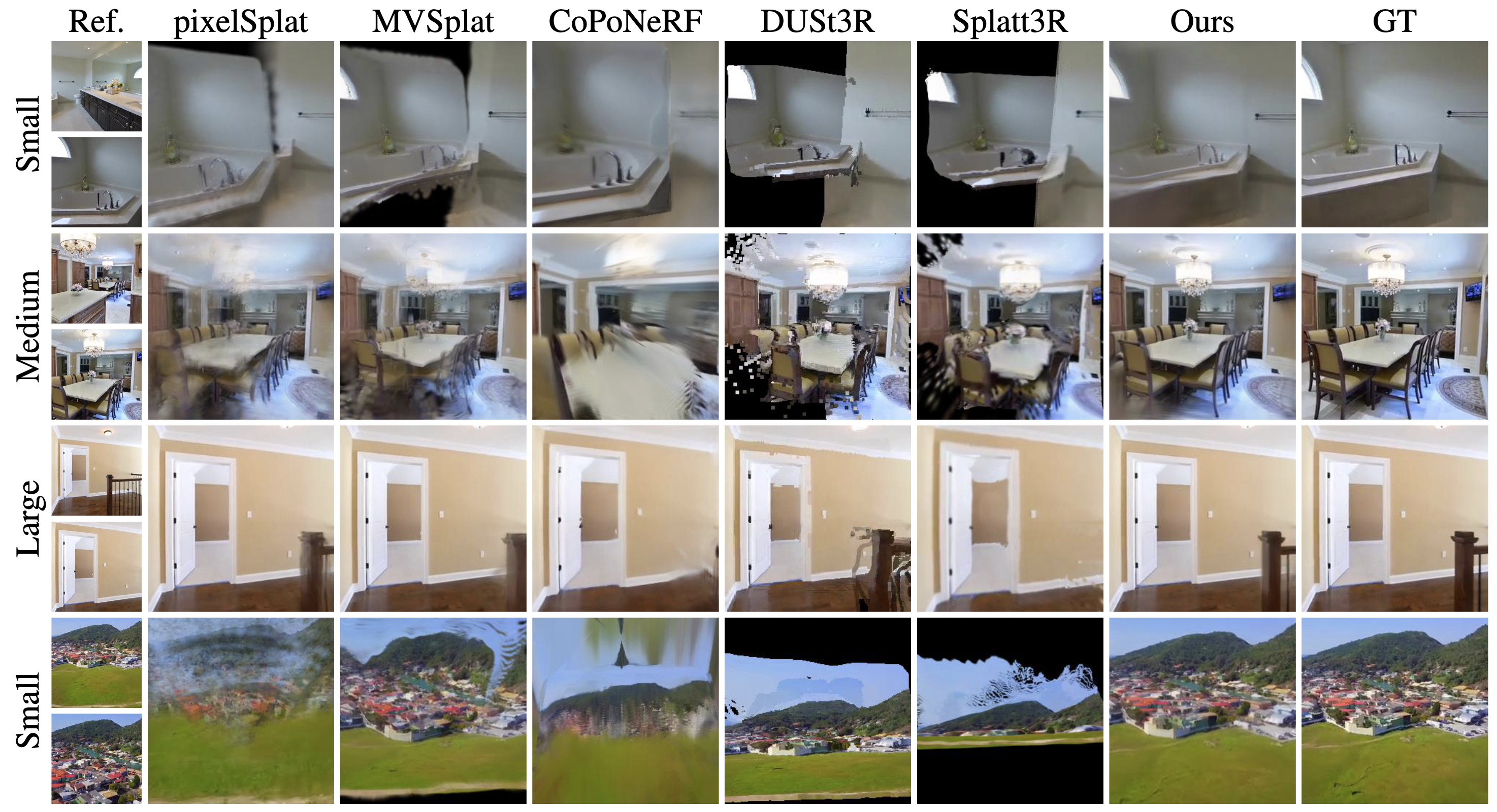

Red and green indicate the input and target camera views, respectively; the rendered images and depths are shown on the right. The magenta and blue arrows point to distorted or misaligned regions in the baseline 3DGS results. Even without camera poses as input, our method produces higher-quality 3D Gaussians, resulting in better color and depth rendering than the baselines.

Compared to the baselines, we obtain: 1) more coherent fusion of the input views, 2) superior reconstruction from limited image overlap, and 3) enhanced geometry reconstruction in non-overlapping regions.

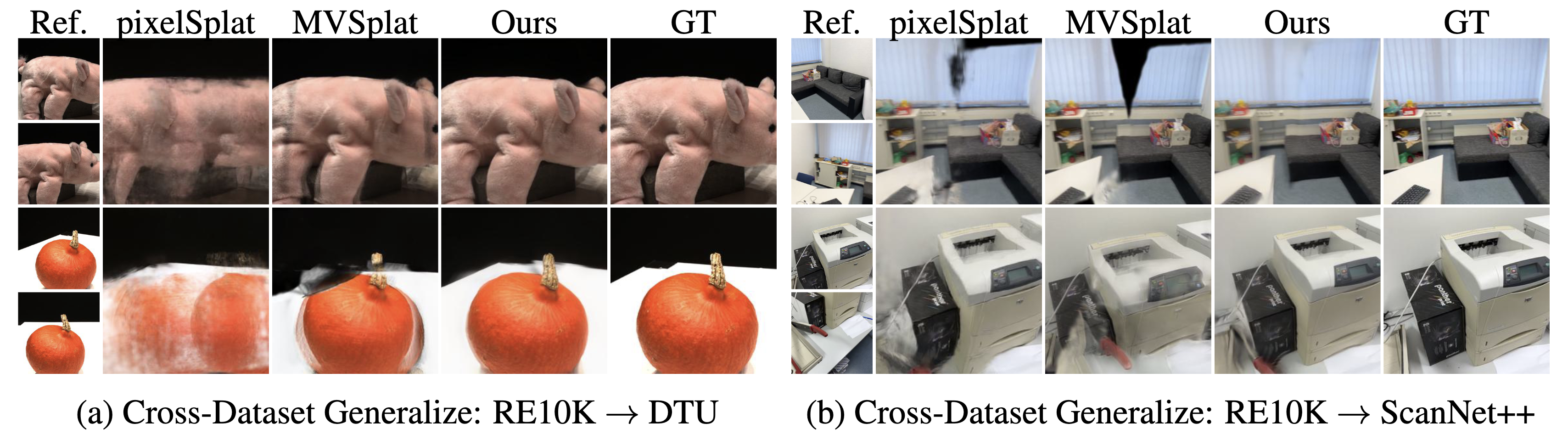

Our model transfers zero-shot to out-of-distribution data better than SOTA pose-required methods. MVSplat and pixelSplat struggle to smoothly merge the underlying geometry and appearance of different input views, whereas NoPoSplat renders competitive and holistic novel views because it outputs Gaussians in a canonical coordinate system.

@article{ye2024noposplat,

title = {No Pose, No Problem: Surprisingly Simple 3D Gaussian Splats from Sparse Unposed Images},

author = {Ye, Botao and Liu, Sifei and Xu, Haofei and Li, Xueting and Pollefeys, Marc and Yang, Ming-Hsuan and Peng, Songyou},

journal = {arXiv preprint arXiv:2410.24207},

year = {2024}

}